HENSOR

Heritage Enrichment using Spatial Object Relations

The aim of this FED-tWIN project is to investigate whether automatic image recognition, with a focus on spatial relations between objects, can be used in collection registration, in making collections searchable, and in art history research.

Can computer vision contribute to the traditional, still largely analogue, research practice within art sciences? Does digital collection registration and access to collection data allow for automation? Can the public, through the use of natural language processing techniques, get to know the collection pieces in a more intuitive way?

A state-of-the-art review of these themes, with practical application to the RMFAB collection data, will be followed by the development of usable tools that can be actively deployed in museum and public activities.

HENSOR is a FED-tWIN project supported by BELSPO.

Heritage Enrichment Pose Search

This project focuses on building a visual search engine for the automatic enrichment of art collections, streamlining retrieval through pose-based search.
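As an illustration of what pose-based search can look like, the sketch below ranks artworks by how closely their detected keypoints match a query pose. It is a minimal example under stated assumptions, not the project's actual implementation: the function names (`normalize_pose`, `pose_similarity`, `search`), the cosine-similarity metric, and the collection layout (a dict of artwork name to keypoint list) are all hypothetical. It assumes every pose has the same keypoints in the same order, as a pose estimator such as MMPose would output for a fixed skeleton.

```python
import math


def normalize_pose(keypoints):
    """Center keypoints on their mean and scale them to unit norm.

    This makes the comparison independent of where the figure stands
    in the image and how large it is.
    """
    n = len(keypoints)
    cx = sum(x for x, _ in keypoints) / n
    cy = sum(y for _, y in keypoints) / n
    centered = [(x - cx, y - cy) for x, y in keypoints]
    # Fall back to 1.0 to avoid dividing by zero for degenerate poses.
    scale = math.sqrt(sum(x * x + y * y for x, y in centered)) or 1.0
    return [(x / scale, y / scale) for x, y in centered]


def pose_similarity(a, b):
    """Cosine similarity between two poses (1.0 means identical shape)."""
    na, nb = normalize_pose(a), normalize_pose(b)
    return sum(xa * xb + ya * yb for (xa, ya), (xb, yb) in zip(na, nb))


def search(query, collection, top_k=3):
    """Return the top_k (name, score) pairs most similar to the query pose.

    `collection` is a hypothetical dict: artwork name -> list of (x, y).
    """
    scored = [(pose_similarity(query, kps), name) for name, kps in collection.items()]
    scored.sort(reverse=True)
    return [(name, score) for score, name in scored[:top_k]]
```

Because the poses are normalized first, a figure that is merely shifted or resized still scores close to 1.0 against the query. Rotation is not compensated for in this sketch.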

Results

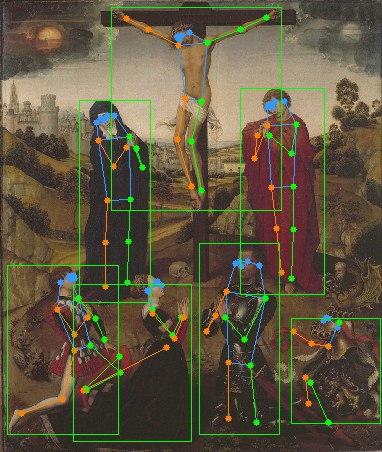

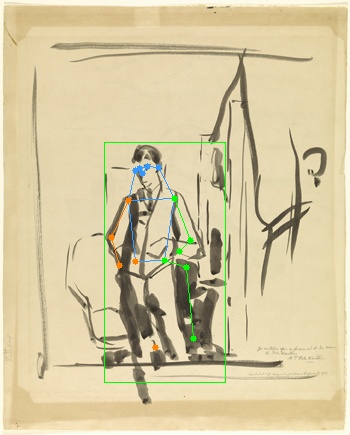

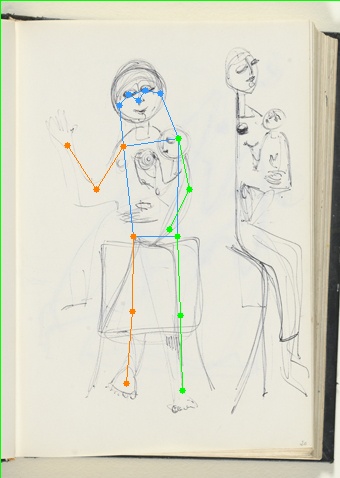

Some of the successful keypoint detections by MMPose are shown above.

However, the model failed to detect keypoints accurately in drawings and in battle scenes with many people. Hand-drawn sketches often lack well-defined body structures, show disjointed body parts, and are highly abstract, all of which hinder the neural network and result in fewer detectable poses. We plan to build a training pipeline with MMPose on such drawings, supplying ground-truth pose keypoints, to improve the results. Alternatively, applying background removal before pose estimation on such drawings might also improve the results.

Boeketje Kunst

In this project, users can create their own unique bouquet with flowers from famous paintings from the Royal Museums of Fine Arts of Belgium. Select individual flowers using our advanced object detection and segmentation models and bundle them together into a personalized composition.

Working

To achieve this result, we combined object detection and segmentation. First, we pre-processed the paintings to bring the flowers more to the foreground. We then used a YOLOv8 object detection model, which we trained ourselves on our own annotated dataset, to detect individual flowers in the paintings. The bounding boxes generated by the YOLOv8 model were passed to Meta's (formerly Facebook's) Segment Anything Model (SAM) to segment the flowers from the painting.
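The detect-then-segment flow described above can be sketched as a small piece of glue code. This is a hedged illustration rather than the project's code: `extract_flowers`, `clip_box`, and the `min_score` threshold are hypothetical names and parameters, and the detector and segmenter are passed in as plain callables so the sketch runs without model weights. The image is assumed to be a row-major grid (a list of rows).

```python
def clip_box(box, width, height):
    """Clamp an (x1, y1, x2, y2) box to the image bounds."""
    x1, y1, x2, y2 = box
    return (max(0, int(x1)), max(0, int(y1)),
            min(width, int(x2)), min(height, int(y2)))


def extract_flowers(image, detect, segment, min_score=0.5):
    """Run a detector, then segment each sufficiently confident box.

    detect(image)       -> iterable of ((x1, y1, x2, y2), score)
    segment(image, box) -> mask for the object inside that box
    Both callables are stand-ins for the real models (assumption).
    """
    height, width = len(image), len(image[0])
    flowers = []
    for box, score in detect(image):
        if score < min_score:
            continue  # skip low-confidence detections
        box = clip_box(box, width, height)
        flowers.append({"box": box, "score": score, "mask": segment(image, box)})
    return flowers
```

In a real setup, `detect` would presumably wrap a trained YOLOv8 model (the Ultralytics package exposes box coordinates and confidences on `results[0].boxes`), and `segment` would wrap SAM's `SamPredictor.predict`, which accepts a `box` prompt. Keeping the models behind callables makes the glue logic easy to test with stubs.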

The masks generated by the Segment Anything Model allowed us to cut out each flower accurately, so you can choose which ones to include in your bouquet. To arrange the flowers into a bouquet, we wrote scripts with the popular OpenCV library that place the segmented flowers in the right positions. We also generated the vases and backgrounds with text-to-image generative AI models, making the entire process automated and seamless.
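The placement step can be pictured as mask-based pasting: only the pixels that the mask marks as belonging to the flower are copied onto the bouquet canvas. The sketch below shows the idea in plain Python on nested lists so that it runs without dependencies. It is an assumption-laden illustration, not the project's scripts: `paste_with_mask` is a hypothetical helper, and the actual OpenCV code would operate on image arrays instead.

```python
def paste_with_mask(canvas, cutout, mask, top, left):
    """Copy cutout pixels onto canvas wherever the mask is truthy.

    canvas, cutout, and mask are row-major grids (lists of rows);
    cutout and mask must have the same shape. Pixels that would land
    outside the canvas are skipped. The canvas is modified in place
    and also returned for convenience.
    """
    for r, (row, mask_row) in enumerate(zip(cutout, mask)):
        for c, (pixel, keep) in enumerate(zip(row, mask_row)):
            y, x = top + r, left + c
            if keep and 0 <= y < len(canvas) and 0 <= x < len(canvas[0]):
                canvas[y][x] = pixel
    return canvas
```

Calling the helper once per selected flower, in back-to-front order, layers the flowers so that later ones overlap earlier ones.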

Results